Standards and Requirements for Data Warehouses

By Lina Ferraiolo, Principal Consultant at Kirey Group

Data Lineage is a data traceability approach that underpins a robust Information Governance framework. For years, many regulations have stressed the importance of data traceability. For Data Warehouses, the 285 standard suggests documenting the procedures for extracting, transforming, controlling, loading data into centralized archives and exploiting it, so that Data Quality can be verified. The documents that govern the calculation and aggregation of risk indicators (Regulation (EU) No 575/2013 and BCBS 239) also require materials describing the history, processing and location of the data, so that they can be easily traced ("data traceability").

The importance of Data Lineage for enhancing data governance

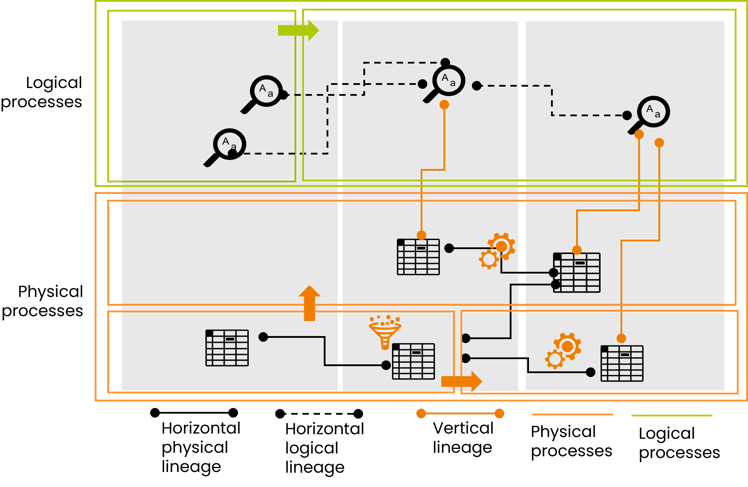

Data lineage is the Source-Target relation between data, in either their technical or their logical sense, obtained by governing the connection between the physical layer of the data and the business layer. With this technique it is possible to build a control model that efficiently and sustainably assesses the quality of the information that is critical and relevant to a business process.
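To make the Source-Target idea concrete, the sketch below stores lineage as a list of directed edges between physical fields and links some of those fields to business terms. It then traces, for a reporting field, every field that feeds it. All field names and business terms are hypothetical, chosen only for illustration; a real repository would hold these relations in a governed metadata store rather than in Python literals.

```python
from collections import defaultdict

# Hypothetical Source -> Target relations between physical fields.
EDGES = [
    ("crm.customer.cust_id", "dwh.dim_customer.customer_key"),
    ("core.loans.cust_id", "dwh.fact_exposure.customer_key"),
    ("core.loans.amount", "dwh.fact_exposure.exposure_amt"),
    ("dwh.fact_exposure.exposure_amt", "mart.risk.total_exposure"),
]

# Hypothetical link between the physical layer and the business layer.
BUSINESS_TERMS = {
    "mart.risk.total_exposure": "Total Exposure",
    "core.loans.amount": "Loan Amount",
}


def build_upstream_index(edges):
    """Map each target field to the set of its direct source fields."""
    upstream = defaultdict(set)
    for source, target in edges:
        upstream[target].add(source)
    return upstream


def trace_upstream(field, upstream):
    """Return every field that feeds `field`, directly or indirectly."""
    seen, stack = set(), [field]
    while stack:
        current = stack.pop()
        for source in upstream.get(current, ()):
            if source not in seen:
                seen.add(source)
                stack.append(source)
    return seen


upstream = build_upstream_index(EDGES)
origins = sorted(trace_upstream("mart.risk.total_exposure", upstream))
print(origins)
print([BUSINESS_TERMS.get(f, "(no business term)") for f in origins])
```

Quality controls placed on the upstream fields returned here are, under this model, the controls that protect the reporting figure.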

Lineage is undoubtedly one of the concepts that has contributed most to a change of perspective in data governance, shifting the focus from the primary need to meet regulatory compliance towards a broader organization of services based on the relations defined between Business and Technical dictionaries. For example, data lineage speeds up Impact Analysis in Change Management processes, makes processes easier to understand, and supports new KPI calculation algorithms built on the information collected while the data are constructed.
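Impact Analysis is essentially the same graph walked in the opposite direction: starting from a field that is about to change, find everything downstream of it. The following is a minimal sketch under that assumption, using a breadth-first search so that each affected field is reported with its distance from the change. The field names are hypothetical.

```python
from collections import defaultdict, deque

# Hypothetical Source -> Target relations; names are illustrative only.
EDGES = [
    ("core.loans.amount", "dwh.fact_exposure.exposure_amt"),
    ("core.fx.rate", "dwh.fact_exposure.exposure_amt"),
    ("dwh.fact_exposure.exposure_amt", "mart.risk.total_exposure"),
    ("mart.risk.total_exposure", "report.kpi.exposure_ratio"),
    ("core.loans.branch", "dwh.dim_branch.branch_key"),
]


def impact_analysis(changed_field, edges):
    """Return {affected_field: hops from the change} for all downstream fields."""
    downstream = defaultdict(list)
    for source, target in edges:
        downstream[source].append(target)

    distance = {}
    queue = deque([(changed_field, 0)])
    while queue:
        field, depth = queue.popleft()
        for target in downstream[field]:
            if target not in distance:  # first visit is the shortest path in BFS
                distance[target] = depth + 1
                queue.append((target, depth + 1))
    return distance


print(impact_analysis("core.fx.rate", EDGES))
```

A change request on `core.fx.rate` would, in this toy model, flag the fact table, the risk mart and the KPI as objects to retest, while leaving the branch dimension out of scope.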

Interest in Data Lineage is growing because it enables an appropriate approach to data traceability and evolution at both the process level (business level) and the application level (technology level). In addition, data lineage is essential for implementing a robust Information Governance framework, which is needed not only to improve the management, aggregation and use of information, but also to provide effective tools for analyzing data and extracting value from it.

Horizontal logical lineage, addressed by successive approximations, is reasonably easier to inventory and survey, as it expresses the passage of information from one process to another or the application of a calculation or aggregation rule. Horizontal physical lineage, on the other hand, is far more difficult. The difficulty grows in areas without documentation, where the data processing source code has to be inspected.
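Logical lineage can be recorded as a chain of process-to-process steps, each carrying the business rule it applies. The sketch below assumes, for simplicity, that each process feeds exactly one downstream process; the process names and rules are hypothetical examples, not taken from any real framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogicalStep:
    source_process: str
    target_process: str
    rule: str  # calculation or aggregation rule, in business terms


# Hypothetical logical lineage between business processes.
LOGICAL_LINEAGE = [
    LogicalStep("Loan origination", "Exposure calculation",
                "sum of outstanding amounts per customer"),
    LogicalStep("Exposure calculation", "Risk reporting",
                "aggregation by counterparty sector"),
]


def describe_path(steps, start):
    """Follow the chain of processes from `start`, one step at a time."""
    # Assumption: at most one outgoing step per process (a linear chain).
    by_source = {step.source_process: step for step in steps}
    lines, current, visited = [], start, set()
    while current in by_source and current not in visited:
        visited.add(current)  # guards against accidental cycles
        step = by_source[current]
        lines.append(f"{step.source_process} -> {step.target_process}: {step.rule}")
        current = step.target_process
    return lines


for line in describe_path(LOGICAL_LINEAGE, "Loan origination"):
    print(line)
```

No source code is needed to populate such a structure, which is one reason logical lineage is easier to survey than physical lineage.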

There are market tools that can carry out such inspections automatically, but experience suggests not relying on them entirely, because no tool can scan all the code running in a company and still deliver the expected results.
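The limits of automatic code inspection show up even in a toy scanner. The sketch below extracts table-level lineage from simple `INSERT INTO ... SELECT ... FROM/JOIN` statements with regular expressions and sets aside anything it cannot resolve, such as dynamic SQL, for manual review. It is deliberately naive and is not how commercial lineage tools work; the statements and table names are hypothetical.

```python
import re

# Naive patterns: target table after INSERT INTO, source tables after FROM/JOIN.
INSERT_RE = re.compile(r"\bINSERT\s+INTO\s+([\w.]+)", re.IGNORECASE)
SOURCE_RE = re.compile(r"\b(?:FROM|JOIN)\s+([\w.]+)", re.IGNORECASE)


def scan(statements):
    """Return (set of (source, target) table edges, list of unresolved statements)."""
    edges, unresolved = set(), []
    for stmt in statements:
        target = INSERT_RE.search(stmt)
        sources = SOURCE_RE.findall(stmt)
        if target and sources:
            for source in sources:
                edges.add((source, target.group(1)))
        else:
            unresolved.append(stmt)  # needs a human to reconstruct the lineage
    return edges, unresolved


# Hypothetical processing code found in a legacy area.
SCRIPTS = [
    "INSERT INTO dwh.fact_exposure SELECT l.cust_id, l.amount * f.rate "
    "FROM core.loans l JOIN core.fx f ON l.ccy = f.ccy",
    "insert into mart.risk select cust_id, sum(amount) "
    "from dwh.fact_exposure group by cust_id",
    "EXECUTE IMMEDIATE v_sql",  # dynamic SQL: tables unknown at scan time
]

edges, unresolved = scan(SCRIPTS)
print(sorted(edges))
print(unresolved)
```

The unresolved list is the part that, in practice, still requires documentation or expert knowledge.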

Data Governance by Design can provide operational procedures and precise rules for new developments, framing new activities so that the steps needed to build the lineage report and keep it up to date are carefully planned and monitored. However, this still leaves the issue of managing the past, that is, everything produced during the operating life of a company. Finally, minor business areas brought into a Data Governance program may not require an in-depth data lineage survey.
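One way to turn "by design" rules into practice is an automated gate that refuses to deploy a new job unless it declares its lineage. The sketch below assumes a hypothetical job manifest format (target fields plus a target-to-sources mapping) and checks two example rules: every target has declared sources, and every target exists in the data dictionary. Both the manifest structure and the rules are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class JobManifest:
    """Hypothetical metadata a developer submits with a new ETL job."""
    name: str
    targets: list
    mappings: dict = field(default_factory=dict)  # target field -> source fields


def check_manifest(manifest, dictionary):
    """Return a list of rule violations; an empty list means the job may ship."""
    problems = []
    for target in manifest.targets:
        if not manifest.mappings.get(target):
            problems.append(f"{manifest.name}: no lineage declared for {target}")
        if target not in dictionary:
            problems.append(f"{manifest.name}: {target} missing from the data dictionary")
    return problems


DICTIONARY = {"mart.risk.total_exposure", "mart.risk.customer_key"}

job = JobManifest(
    name="load_risk_mart",
    targets=["mart.risk.total_exposure", "mart.risk.rating"],
    mappings={"mart.risk.total_exposure": ["dwh.fact_exposure.exposure_amt"]},
)

for problem in check_manifest(job, DICTIONARY):
    print(problem)
```

A check like this keeps the lineage report current for new developments, but, as the text notes, it does nothing for code that already exists.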

An approach to Data Governance through integrated Data Modeling

Given these premises, we believe it is necessary to draw on expertise in data modeling for Data Warehouses and Data Marts and to combine it with the need for a centralized repository that captures the relationships and historical depth a data governance system requires, while keeping the focus on the part concerning logical and physical dictionaries.
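Borrowing from Data Warehouse modeling, a centralized repository can keep historical depth by giving each Source-Target relation a validity interval, much like a slowly changing dimension. The sketch below assumes half-open intervals `[valid_from, valid_to)` and answers the question "which fields fed this target on a given date?". The relations and dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class LineageRelation:
    source: str
    target: str
    valid_from: date
    valid_to: Optional[date] = None  # None means the relation is still in force


def lineage_as_of(relations, target, day):
    """Sources of `target` valid on `day`, using [valid_from, valid_to) intervals."""
    return sorted(
        r.source
        for r in relations
        if r.target == target
        and r.valid_from <= day
        and (r.valid_to is None or day < r.valid_to)
    )


# Hypothetical history: a legacy system was replaced in mid-2020.
REPO = [
    LineageRelation("legacy.loans.amount", "dwh.fact_exposure.exposure_amt",
                    date(2015, 1, 1), date(2020, 7, 1)),
    LineageRelation("core.loans.amount", "dwh.fact_exposure.exposure_amt",
                    date(2020, 7, 1)),
    LineageRelation("core.fx.rate", "dwh.fact_exposure.exposure_amt",
                    date(2018, 1, 1)),
]

print(lineage_as_of(REPO, "dwh.fact_exposure.exposure_amt", date(2019, 12, 31)))
print(lineage_as_of(REPO, "dwh.fact_exposure.exposure_amt", date(2021, 1, 1)))
```

Closing a relation instead of deleting it is what lets the repository answer regulatory questions about past reporting dates.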

It is important to keep the focus on elementary data while designing a model that treats each elementary data item as the atomic element of a more complex system. The result represents elementary data as the finest-grained element within a hierarchy; this means identifying the elements of your data organization that reconstruct and generate ordered levels of aggregation, for example up to Data Systems or Management Applications.
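A hierarchy of this kind can be sketched as a simple parent map, with elementary fields as leaves and tables, management applications and data systems as successive levels. The sketch below assumes a strict tree (each element has at most one parent and there are no cycles); all names are hypothetical.

```python
# Hypothetical hierarchy: each element points to its parent one level up.
PARENT = {
    "core.loans.amount": "core.loans",            # field -> table
    "core.loans": "Loan Management",              # table -> management application
    "Loan Management": "Core Banking System",     # application -> data system
    "mart.risk.total_exposure": "mart.risk",
    "mart.risk": "Risk Data Mart",
    "Risk Data Mart": "Data Warehouse",
}


def ancestors(element):
    """Levels of aggregation above `element`, from nearest to farthest."""
    path = []
    while element in PARENT:  # assumes no cycles in the hierarchy
        element = PARENT[element]
        path.append(element)
    return path


def elements_under(node):
    """Elementary fields (leaves) whose hierarchy path passes through `node`."""
    parents = set(PARENT.values())
    leaves = [e for e in PARENT if e not in parents]
    return sorted(leaf for leaf in leaves if node in ancestors(leaf))


print(ancestors("core.loans.amount"))
print(elements_under("Core Banking System"))
```

Keeping the elementary field as the leaf means every higher level can be reconstructed from it, rather than maintained separately.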

Extending the Source-Target concept to elements at the same hierarchical level enriches the model: once detected, such a relation constitutes a first approximation of field-level data lineage, coarser in granularity but still descriptive.
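The sketch below illustrates both ways a coarser, application-level lineage can arise under this model: rolling up documented field-level edges to their parent applications, and recording application-to-application relations directly where no field-level documentation exists. It assumes every field is mapped to an application; the mapping and relations are hypothetical.

```python
# Hypothetical field -> application mapping (one level up in the hierarchy).
APPLICATION_OF = {
    "core.loans.amount": "Loan Management",
    "core.fx.rate": "Treasury",
    "dwh.fact_exposure.exposure_amt": "Data Warehouse",
    "mart.risk.total_exposure": "Risk Data Mart",
}

# Documented field-level Source -> Target relations.
FIELD_EDGES = [
    ("core.loans.amount", "dwh.fact_exposure.exposure_amt"),
    ("core.fx.rate", "dwh.fact_exposure.exposure_amt"),
    ("dwh.fact_exposure.exposure_amt", "mart.risk.total_exposure"),
]

# Relations surveyed only at application level (no field-level detail yet).
KNOWN_APP_EDGES = {("Treasury", "Risk Data Mart")}


def roll_up(field_edges, parent_of):
    """Project field-level edges onto the parent level, dropping internal edges."""
    return {
        (parent_of[source], parent_of[target])
        for source, target in field_edges
        if parent_of[source] != parent_of[target]
    }


app_lineage = roll_up(FIELD_EDGES, APPLICATION_OF) | KNOWN_APP_EDGES
for source, target in sorted(app_lineage):
    print(f"{source} -> {target}")
```

Directly surveyed relations such as `Treasury -> Risk Data Mart` are the first approximation described in the text; they can later be refined into field-level edges as documentation or resources allow.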

In some cases a lower level of precision may be acceptable because of missing documentation or limited resources, but it is always necessary to know the path the data follow and how they are handled, both to respond to regulatory requests and to lay the foundations for better analysis and exploitation of data.